So, we’re butting up against the storage capacity of our Netgear Stora again (93% full). The NAS currently has 2 x 2TB drives and no free bays to drop more drives into, so whatever the next arrangement is, it has to involve getting rid of at least one of the current drives. The Stora is currently backed up to an external drive enclosure with a 4TB drive mounted in it. Other things are also backed up on that external drive, so it’s more pressed for space than the Stora.

So here’s the plan:

- collect underpants

This was a flippant comment, but it’s upgrade season and we recently acquired a second-hand computer with an i5-3470S CPU, the most powerful thing in the house by a significant margin. I wanted its dual DisplayPort outputs, but unfortunately it could only be upgraded to 8GB of RAM, so instead the CPU got swapped into our primary desktop (and a graphics card was acquired to run dual digital displays). Dropping in a replacement CPU required replacing the thermal grease, and that meant a rag to wipe off the old grease, thus the underpants.

- back up the Stora to the 4TB drive

- acquire a cheap 8TB disk because this is for backing up, not primary storage

- clone the 4TB drive onto it using Clonezilla

- expand the cloned 4TB partition to the full 8TB of drive space

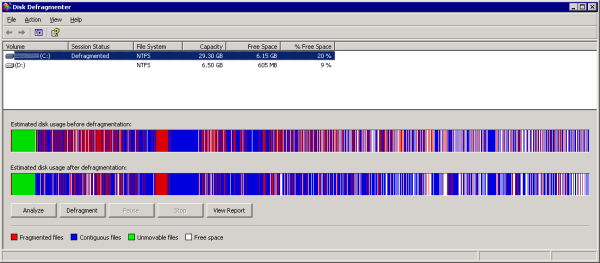

Well, that didn’t work. Clonezilla didn’t seem to copy the data reliably, but admittedly I was running a stupidly old version. Several hours of mucking around with SATA connectors and Ubuntu NTFS drivers later, I gave up and copied the disk using Windows. It took several days, even using USB3 HDD enclosures, which is why I spent so much time mucking around trying to avoid it.

- back up the Stora to the 8TB drive

- remove the 2 x 2TB drives from the Stora

- insert the 4TB drive into the Stora

- allow the Stora to format the 4TB drive

- pull the 4TB drive

- mount the 4TB and 2 x 2TB drives in a not-otherwise-busy machine

- copy the data from the 2 x 2TB drives onto the 4TB drive

- reinsert the 4TB drive into the Stora

- profit!

And, by profit, I mean cascade the 2TB drives into desktop machines that have 90% full 1TB drives… further rounds of disk duplication ensue. 1TB drives then cascade to other desktop machines, further rounds of disk duplication ensue.
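Given how the Clonezilla attempt went, each of those copies is worth checking before the source drive gets wiped. Here is a minimal sketch in Python, assuming both drives are mounted as ordinary directories. The function names and the mount points in the usage note are hypothetical, and hashing every file twice will be slow on multi-terabyte drives:

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def compare_trees(source, dest):
    """List files under source that are missing from dest or differ in content.

    Returns (relative_path, reason) tuples, where reason is "missing" or
    "differs". Extra files that exist only in dest are not reported.
    """
    source, dest = Path(source), Path(dest)
    problems = []
    for src_file in sorted(source.rglob("*")):
        if not src_file.is_file():
            continue  # skip directories and anything that is not a regular file
        rel = src_file.relative_to(source)
        dst_file = dest / rel
        if not dst_file.is_file():
            problems.append((rel.as_posix(), "missing"))
        elif file_digest(src_file) != file_digest(dst_file):
            problems.append((rel.as_posix(), "differs"))
    return problems
```

Something like `compare_trees("/mnt/old2tb", "/mnt/new4tb")` (made-up mount points) returns an empty list when every source file has an identical copy on the destination.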

At the end of this process, the entire fleet will have been upgraded. But the original problem of butting up against the Stora’s capacity will not have been addressed; this will hopefully be a simple matter of dropping another drive in.

The last time we did this, we paid $49.50/TB for storage. This time around, it was $44.35; a 10% drop in storage prices isn’t anything to write home about in a four-and-a-half year window.
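For anyone who wants to check the arithmetic, here is a quick sketch in Python using the two prices above. The annualised figure assumes a steady compound decline over the 4.5 years, which is my simplification rather than anything measured:

```python
def total_drop(old_price, new_price):
    """Fractional price drop from old_price to new_price."""
    return (old_price - new_price) / old_price


def annual_drop(old_price, new_price, years):
    """Equivalent yearly drop, assuming a constant compound rate of decline."""
    return 1 - (new_price / old_price) ** (1 / years)


old_per_tb = 49.50  # $/TB paid last time
new_per_tb = 44.35  # $/TB paid this time
years = 4.5         # gap between the two purchases

print(f"total drop:      {total_drop(old_per_tb, new_per_tb):.1%}")
print(f"annualised drop: {annual_drop(old_per_tb, new_per_tb, years):.1%}")
```

The total comes out a touch over 10%, which spread across the window is only a couple of percent a year.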