The terrible events in London overnight do have some relevance to us as humble IT workers. While there are many critical jobs performed in such situations by the emergency services, communications and other systems are also important.

Obviously top of the pile in this respect are the systems dealing with the emergency services themselves: their communications and despatch systems — and we know that mobile phone networks were affected by the chaos. A few notches down, but growing more significant, are the web sites (and background systems that feed them) to inform the public.

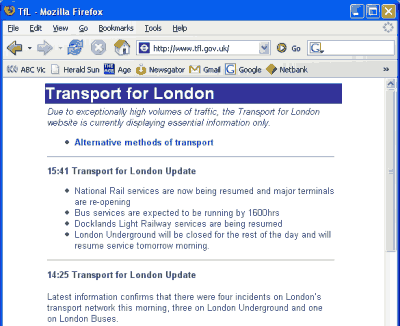

While the BBC News web site seemed to cope in general as events unfolded (I’m sure they’re well-versed in this kind of incident), their live video and audio streams were swamped. Likewise CNN responded okay, though ITN was sluggish. The Transport for London site didn’t respond for some time, before they switched to a plainer, less server-intensive basic information page.
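The switch to a plainer page can be thought of as a simple load-shedding rule. The following is only a minimal sketch of that idea, not a description of how Transport for London actually did it; the function name `choose_page`, the `capacity` figure and the 80% threshold are all hypothetical.

```python
def choose_page(active_requests: int, capacity: int, degrade_at: float = 0.8) -> str:
    """Decide which version of a page to serve under the current load.

    Returns "basic" (a plain, static, cheap-to-serve page) once the site is
    at or above `degrade_at` of its assumed capacity, otherwise "full".
    All names and numbers here are illustrative assumptions.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    load = active_requests / capacity
    return "basic" if load >= degrade_at else "full"


if __name__ == "__main__":
    # Quiet day: serve the normal, dynamic site.
    print(choose_page(active_requests=200, capacity=1000))
    # Major incident: fall back to the plain information page.
    print(choose_page(active_requests=950, capacity=1000))
```

The point of the design is that the basic page costs almost nothing to serve, so the site stays reachable at exactly the moment people most need it.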

Last week Connex in Melbourne suffered a shutdown, and similarly, their web site didn’t cope. While most disruptions are also communicated to SMS subscribers, the shutdown itself was caused by problems with the same systems used for sending out the alerts. Melbourne’s public transport umbrella site Metlink was responding, but the problem there was a lack of updates.

As the web becomes more pervasive, and media outlets also use it to gather information, capacity planning for peak demand becomes important. Obviously no organisation wants to spend up big on servers that never get used, but for mass communication of detailed information, the web is cheaper than employing operators or even installing masses of phone lines, and will play an increasing role in keeping the general public informed of events.

Another problem is scalability. The Wikinews article on Coordinated terrorist attack in London was uneditable at times due to edit conflicts.

The BBC site kept timing out for me; I had to try almost every page I wanted two or three times. I couldn’t connect to the Radio 4 live feed at all. Even Australian news sites (news.com and smh) were slow, even before the TV channels started their live broadcasts.

A few years ago, I used to work for a large news/entertainment website (not in Australia). The pages were served by a set of web servers, all of them with the same content, with http://www…..com pointing to several IP addresses. In time, we decided to experiment with load-balancing switches; these are switches that sit in front of the web servers, receive the HTTP requests and pass them on to the best server (bypassing any servers that are down or too slow). After some months using a small switch made by Cisco, we felt that we would soon outgrow it, and we started looking at other options.
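For anyone unfamiliar with what such a switch does, the selection step can be sketched roughly as below. This is a simplified illustration only, not how the Cisco or Alteon products actually work; the `Backend` class, the `pick_backend` function, the 500 ms cut-off and the "fewest active requests" rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Backend:
    """One web server behind the balancer (hypothetical model)."""
    name: str
    healthy: bool = True           # result of the last health check
    avg_response_ms: float = 0.0   # recent average response time
    active_requests: int = 0       # requests currently in flight


def pick_backend(backends: Sequence[Backend],
                 max_response_ms: float = 500.0) -> Optional[Backend]:
    """Pick the "best" server: skip any that are down or too slow,
    then choose the one with the fewest requests in flight.
    Returns None if no server is usable."""
    candidates = [
        b for b in backends
        if b.healthy and b.avg_response_ms <= max_response_ms
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda b: b.active_requests)


if __name__ == "__main__":
    pool = [
        Backend("web1", healthy=False),                           # down
        Backend("web2", avg_response_ms=900, active_requests=3),  # too slow
        Backend("web3", avg_response_ms=120, active_requests=12),
        Backend("web4", avg_response_ms=150, active_requests=5),
    ]
    chosen = pick_backend(pool)
    print(chosen.name if chosen else "no server available")
```

Compared with plain DNS pointing at several IP addresses, the difference is that a dead or struggling server stops receiving traffic instead of continuing to get its share of requests.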

We eventually got the guys from Alteon to agree to a live test, and scheduled a swap of the Cisco switch for an Alteon one (on loan) for 24 hours, so we could see how it would react. We got to the office very early in the morning, replaced the switch and watched.

The date this happened? Sept. 11, 2001.

The Alteon switch did not handle the extra load very well; the Cisco one, after we put it back, did. It was mostly a matter of fine-tuning, and we eventually moved to Alteon. Still, as a testing day, we couldn’t have made a better choice…

More on this from the BBC: http://news.bbc.co.uk/1/hi/technology/4663423.stm

That’s the link I was thinking of, Tony. Interesting to read this kind of thing. On Thursday, I found it easier to look at US sites for any streaming media, though the BBC was OK for the static news updates. By early afternoon, I was able to get a reliable News 24 webcast on the BBC.